diff --git a/README.md b/README.md

index b40282a..831ef71 100644

--- a/README.md

+++ b/README.md

@@ -1,8 +1,13 @@

+> **Note**

+>

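+> Recent pip releases of the Gradio package this project depends on (Gradio 3.26 to 3.27) have serious bugs, so when installing, please use exactly the versions **pinned** in requirements.txt.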

+>

+> `pip install -r requirements.txt -i https://mirrors.aliyun.com/pypi/simple/`

+>
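The version constraint in the note above can also be checked programmatically. This is a minimal sketch that assumes only what the note states (the Gradio 3.26 and 3.27 series are affected). The helper names `is_affected_gradio` and `installed_gradio_version` are hypothetical and not part of this project.

```python
from importlib import metadata


def is_affected_gradio(version: str) -> bool:
    """Return True if `version` is in the 3.26 or 3.27 series named in the note."""
    parts = version.split(".")
    try:
        major, minor = int(parts[0]), int(parts[1])
    except (IndexError, ValueError):
        # Unparseable version strings are treated as "not known to be affected".
        return False
    return major == 3 and minor in (26, 27)


def installed_gradio_version():
    """Return the installed Gradio version string, or None if Gradio is absent."""
    try:
        return metadata.version("gradio")
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_gradio_version()
    if found is None:
        print("gradio is not installed; run: pip install -r requirements.txt")
    elif is_affected_gradio(found):
        print(f"gradio {found} is in the affected range; reinstall from requirements.txt")
    else:
        print(f"gradio {found} is outside the affected range")
```

Running the script after installation gives a quick confirmation that the pinned version was actually picked up.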

+# GPT Academic Optimization (ChatGPT Academic)

-# ChatGPT Academic Optimization

-

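-**If you like this project, please give it a Star; if you have come up with better shortcuts or function plugins, feel free to open an issue or pull request**

+**If you like this project, please give it a Star; if you have come up with better shortcuts or function plugins, feel free to open a pull request**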

If you like this project, please give it a Star. If you've come up with more useful academic shortcuts or functional plugins, feel free to open an issue or pull request. We also have a README in [English|](docs/README_EN.md)[日本語|](docs/README_JP.md)[Русский|](docs/README_RS.md)[Français](docs/README_FR.md) translated by this project itself.

@@ -20,25 +25,25 @@ If you like this project, please give it a Star. If you've come up with more use

--- | ---

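One-click polishing | Supports one-click polishing and one-click grammar checking for papers

One-click Chinese-English translation | One-click translation between Chinese and English

-One-click code explanation | Displays and explains code correctly

+One-click code explanation | Displays code, explains code, generates code, and adds comments to code

[Custom shortcut keys](https://www.bilibili.com/video/BV14s4y1E7jN) | Supports custom shortcut keys

-[Proxy server configuration](https://www.bilibili.com/video/BV1rc411W7Dr) | Supports connecting to OpenAI/Google and others through a proxy, instantly unlocking ChatGPT's internet [real-time information aggregation](https://www.bilibili.com/video/BV1om4y127ck/) capability

Modular design | Supports powerful custom [function plugins](https://github.com/binary-husky/chatgpt_academic/tree/master/crazy_functions); plugins support [hot reloading](https://github.com/binary-husky/chatgpt_academic/wiki/%E5%87%BD%E6%95%B0%E6%8F%92%E4%BB%B6%E6%8C%87%E5%8D%97)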

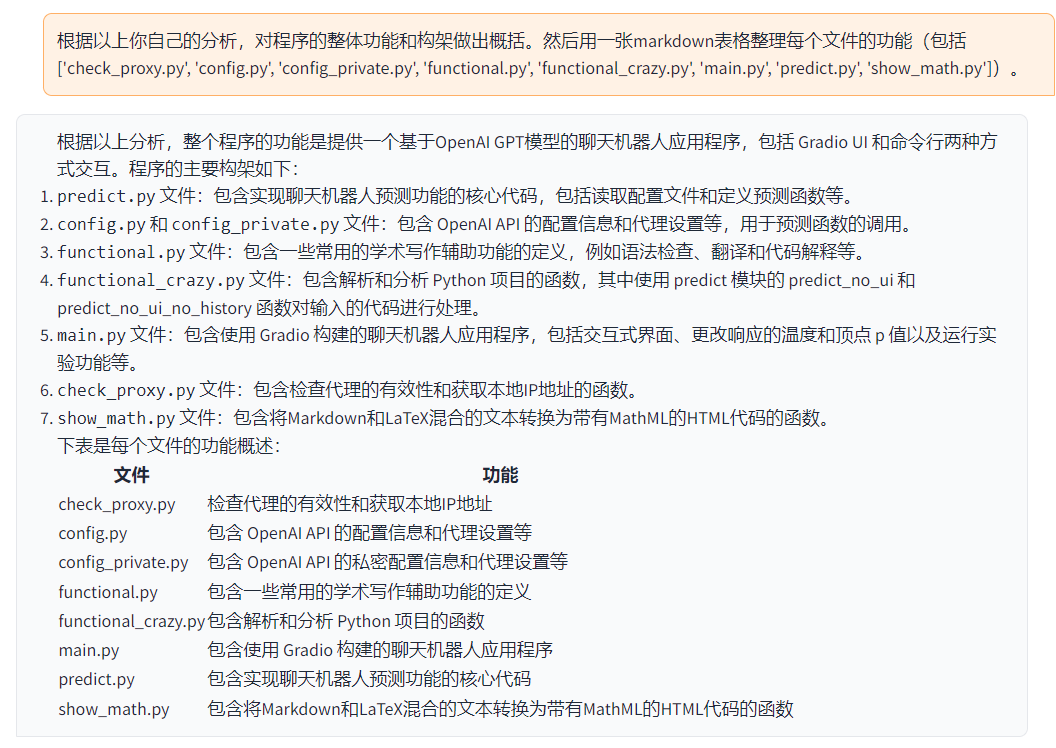

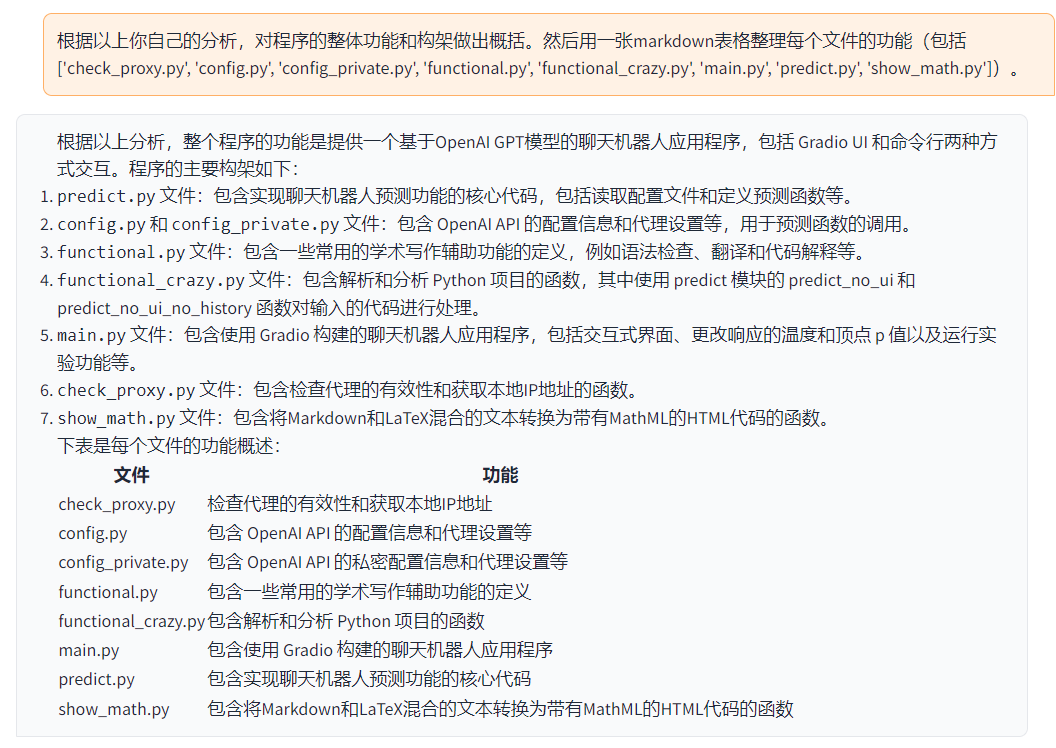

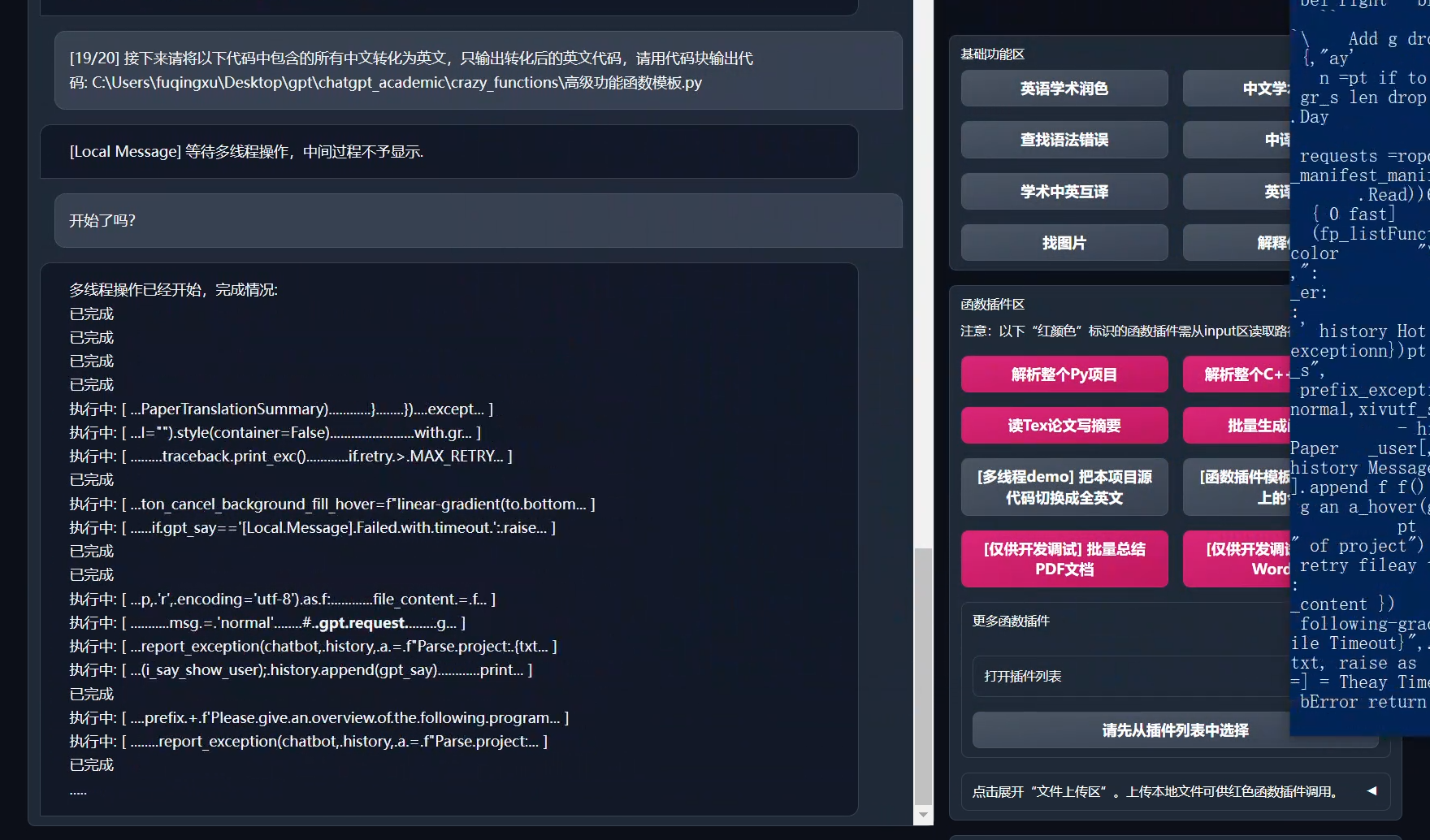

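[Self-analysis of the program](https://www.bilibili.com/video/BV1cj411A7VW) | [Function plugin] [Understand in one click](https://github.com/binary-husky/chatgpt_academic/wiki/chatgpt-academic%E9%A1%B9%E7%9B%AE%E8%87%AA%E8%AF%91%E8%A7%A3%E6%8A%A5%E5%91%8A) the source code of this project

[Program analysis](https://www.bilibili.com/video/BV1cj411A7VW) | [Function plugin] One-click analysis of the project tree of other Python/C/C++/Java/Lua/... projects

-Paper reading | [Function plugin] One-click interpretation of a full LaTeX paper with abstract generation

+Paper reading and translation | [Function plugin] One-click interpretation of a full LaTeX/PDF paper with abstract generation

Full-text LaTeX [translation](https://www.bilibili.com/video/BV1nk4y1Y7Js/) and [polishing](https://www.bilibili.com/video/BV1FT411H7c5/) | [Function plugin] One-click translation or polishing of LaTeX papers

Batch comment generation | [Function plugin] One-click batch generation of function comments

-Chat analysis report generation | [Function plugin] Automatically generates a summary report after running

Markdown [Chinese-English translation](https://www.bilibili.com/video/BV1yo4y157jV/) | [Function plugin] Have you seen the [README](https://github.com/binary-husky/chatgpt_academic/blob/master/docs/README_EN.md) in the 5 languages above?

-[arxiv assistant](https://www.bilibili.com/video/BV1LM4y1279X) | [Function plugin] Enter an arxiv article URL to translate the abstract and download the PDF in one click

+Chat analysis report generation | [Function plugin] Automatically generates a summary report after running

[Full-text PDF paper translation](https://www.bilibili.com/video/BV1KT411x7Wn) | [Function plugin] Extracts the title & abstract of a PDF paper and translates the full text (multithreaded)

+[Arxiv assistant](https://www.bilibili.com/video/BV1LM4y1279X) | [Function plugin] Enter an arxiv article URL to translate the abstract and download the PDF in one click

[Google Scholar integration assistant](https://www.bilibili.com/video/BV19L411U7ia) | [Function plugin] Given any Google Scholar search page URL, let GPT [write the related works section](https://www.bilibili.com/video/BV1GP411U7Az/) for you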

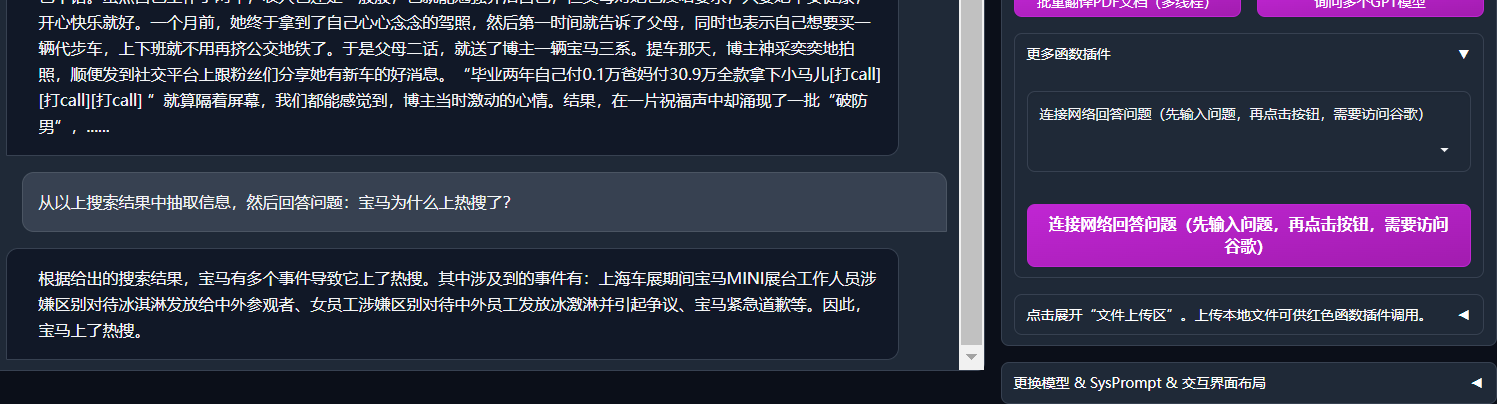

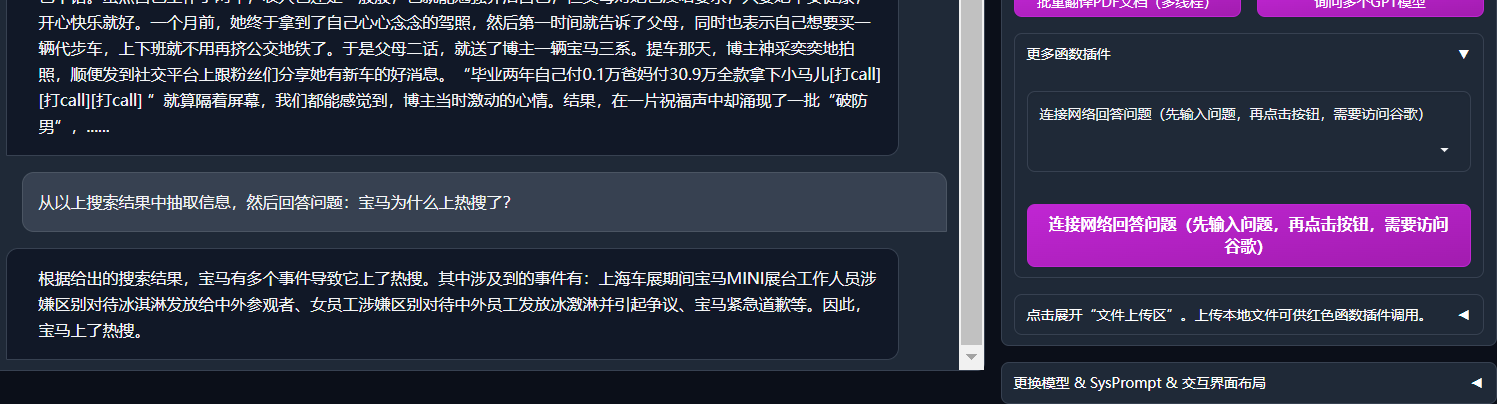

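+Internet information aggregation + GPT | [Function plugin] Lets GPT first fetch information from the internet in one click and then answer the question, so the information never goes out of date

Formula/image/table display | Shows both the [TeX form and the rendered form](https://user-images.githubusercontent.com/96192199/230598842-1d7fcddd-815d-40ee-af60-baf488a199df.png) of formulas at the same time; supports formula and code highlighting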

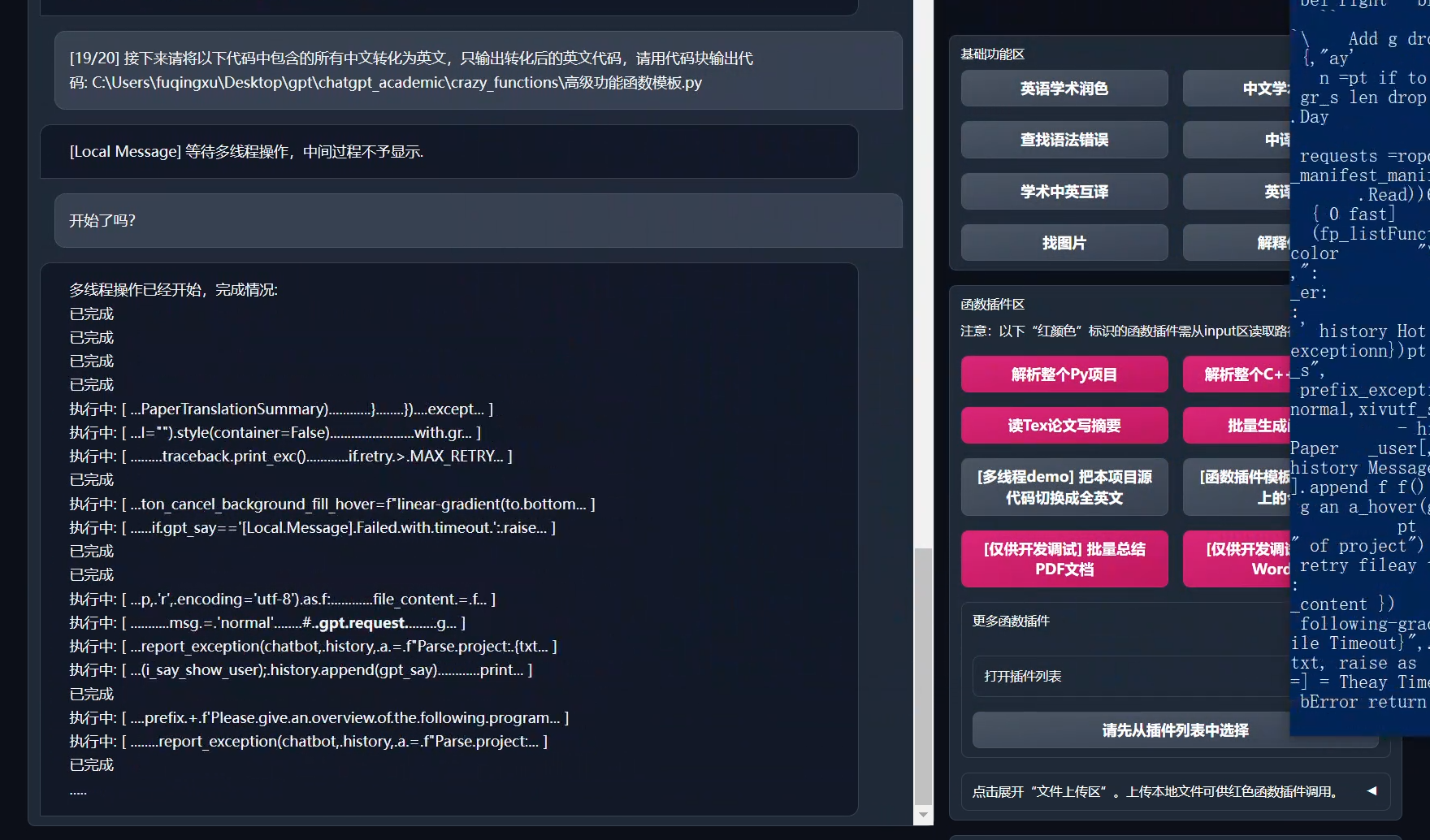

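Multithreaded function plugin support | Supports multithreaded calls to chatgpt for one-click processing of [massive amounts of text](https://www.bilibili.com/video/BV1FT411H7c5/) or programs

Dark gradio [theme](https://github.com/binary-husky/chatgpt_academic/issues/173) | Append ```/?__dark-theme=true``` to the browser URL to switch to the dark theme

[Multiple LLM models](https://www.bilibili.com/video/BV1wT411p7yf) supported, [API2D](https://api2d.com/) interface supported | Being served by GPT3.5, GPT4, and [Tsinghua ChatGLM](https://github.com/THUDM/ChatGLM-6B) at the same time must feel great, right?

-huggingface [online demo](https://huggingface.co/spaces/qingxu98/gpt-academic), no VPN needed | After logging in to huggingface, duplicate [this space](https://huggingface.co/spaces/qingxu98/gpt-academic)

+More LLM models supported | Newly added Newbing test interface (the new Bing AI)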

…… | ……
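The dark-theme row in the table above amounts to adding one query parameter to the page URL. Here is a small sketch using only the Python standard library. The helper name `with_dark_theme` is hypothetical, and the example address simply reuses the example port 50923 mentioned in the Docker instructions.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_dark_theme(url: str) -> str:
    """Return `url` with the `__dark-theme=true` query parameter set."""
    parts = urlsplit(url)
    # Keep any query parameters that are already present.
    query = dict(parse_qsl(parts.query, keep_blank_values=True))
    query["__dark-theme"] = "true"
    # An empty path becomes "/" so the result matches the "/?__dark-theme=true" form.
    path = parts.path or "/"
    return urlunsplit((parts.scheme, parts.netloc, path, urlencode(query), parts.fragment))


if __name__ == "__main__":
    print(with_dark_theme("http://localhost:50923"))
```

Opening the printed address in a browser should have the same effect as typing the suffix by hand.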

@@ -75,9 +80,6 @@ huggingface免科学上网[在线体验](https://huggingface.co/spaces/qingxu98/

-Mixed invocation of multiple large language models: [huggingface beta](https://huggingface.co/spaces/qingxu98/academic-chatgpt-beta) (the huggingface version does not support chatglm)

-

-

---

## Installation - Method 1: Run directly (Windows, Linux or MacOS)

@@ -88,20 +90,16 @@ git clone https://github.com/binary-husky/chatgpt_academic.git

cd chatgpt_academic

```

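-2. Configure API_KEY and proxy settings

+2. Configure API_KEY

+

+In `config.py`, configure the API KEY and other [settings](https://github.com/binary-husky/gpt_academic/issues/1).

-In `config.py`, configure the overseas proxy and the OpenAI API KEY, as described below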

-```

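-1. If you are in mainland China, you need to set up an overseas proxy to use the OpenAI API smoothly. Read config.py carefully for instructions (1. set USE_PROXY to True; 2. modify proxies as instructed).

-2. Configure the OpenAI API KEY. Any number of OpenAI keys and API2D keys can coexist with load balancing; separate multiple keys with ASCII commas, for example API_KEY="OpenAI-key-1,API2D-key-2,OpenAI-key-3,OpenAI-key-4"

-3. Issues related to the proxy network (network timeouts, proxy not working) are collected at https://github.com/binary-husky/chatgpt_academic/issues/1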

-```

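(P.S. When running, the program first checks whether a private configuration file named `config_private.py` exists and uses its settings to override the settings of the same name in `config.py`. So if you understand this configuration loading logic, we strongly recommend creating a new configuration file named `config_private.py` next to `config.py` and moving (copying) the settings from `config.py` into `config_private.py`. `config_private.py` is not tracked by git, which keeps your private information safer.)

3. Install dependencies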

```sh

-# (Option I: if you are familiar with python) recommended

+# (Option I: if you are familiar with python) (python 3.9 or later, the newer the better)

python -m pip install -r requirements.txt

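# Note: use the official pip index or the Aliyun pip mirror; other pip mirrors (such as some universities' mirrors) may cause problems. To switch mirrors temporarily: python -m pip install -r requirements.txt -i https://mirrors.aliyun.com/pypi/simple/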

@@ -123,14 +121,8 @@ python main.py

5. Test the function plugins

```

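-- Test Python project analysis

- (Option 1) In the input area, enter `./crazy_functions/test_project/python/dqn`, then click "解析整个Python项目" (Parse the entire Python project)

- (Option 2) Expand the file upload area, drag in a python file or an archive containing python files, and once the feedback message appears, click "解析整个Python项目" (Parse the entire Python project)

-- Test self code interpretation (this project explaining itself)

- Click "[多线程Demo] 解析此项目本身(源码自译解)" ([Multithreading demo] Parse this project itself (source code self-explanation))

- Test the function plugin template (it asks gpt what happened on this day in history); you can use this function as a template to implement more complex features

Click "[函数插件模板Demo] 历史上的今天" ([Function plugin template demo] Today in history)

-- More features are available in the drop-down menu of the function plugin area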

```
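The override behaviour described in the P.S. of step 2 (settings in `config_private.py` take precedence over settings of the same name in `config.py`) can be sketched roughly as follows. This is an illustration under stated assumptions, not the project's actual loader: the function name `read_setting` is hypothetical, the two modules are simulated with `SimpleNamespace` objects, and the key values are placeholders.

```python
from types import SimpleNamespace


def read_setting(name, public_config, private_config=None):
    """Return `name` from private_config when it is defined there, else from public_config."""
    if private_config is not None and hasattr(private_config, name):
        return getattr(private_config, name)
    return getattr(public_config, name)


# Stand-ins for config.py and config_private.py (assumption: in a real checkout
# these would be imported modules rather than SimpleNamespace objects).
public = SimpleNamespace(API_KEY="placeholder-key", WEB_PORT=50923)
private = SimpleNamespace(API_KEY="my-private-key")

if __name__ == "__main__":
    print(read_setting("API_KEY", public, private))   # taken from the private file
    print(read_setting("WEB_PORT", public, private))  # falls back to config.py
```

Because the lookup is per setting, `config_private.py` only needs to contain the values you want to keep out of version control.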

## Installation - Method 2: Use Docker

@@ -141,7 +133,7 @@ python main.py

# Download the project

git clone https://github.com/binary-husky/chatgpt_academic.git

cd chatgpt_academic

-# Configure “overseas Proxy”, “API_KEY”, “WEB_PORT” (for example 50923), and so on

+# Configure “Proxy”, “API_KEY”, “WEB_PORT” (for example 50923), and so on

Edit config.py with any text editor

# Install

docker build -t gpt-academic .

@@ -164,7 +156,6 @@ docker run --rm -it --net=host --gpus=all gpt-academic

docker run --rm -it --net=host --gpus=all gpt-academic bash

```

-

## Installation - Method 3: Other deployment options (requires cloud server knowledge and experience)

1. Remote cloud server deployment

@@ -176,14 +167,6 @@ docker run --rm -it --net=host --gpus=all gpt-academic bash

3. How to run under a sub-path URL (such as `http://localhost/subpath`)

See the [FastAPI instructions](docs/WithFastapi.md)

-## Installation - Proxy configuration

-1. Standard method

-[Configure the proxy](https://github.com/binary-husky/chatgpt_academic/issues/1)

-

-2. Beginner tutorial

-[Beginner tutorial](https://github.com/binary-husky/chatgpt_academic/wiki/%E4%BB%A3%E7%90%86%E8%BD%AF%E4%BB%B6%E9%97%AE%E9%A2%98%E7%9A%84%E6%96%B0%E6%89%8B%E8%A7%A3%E5%86%B3%E6%96%B9%E6%B3%95%EF%BC%88%E6%96%B9%E6%B3%95%E5%8F%AA%E9%80%82%E7%94%A8%E4%BA%8E%E6%96%B0%E6%89%8B%EF%BC%89)

-

-

---

## Customizing new convenience buttons / custom function plugins

@@ -211,73 +194,11 @@ docker run --rm -it --net=host --gpus=all gpt-academic bash

For details, see the [function plugin guide](https://github.com/binary-husky/chatgpt_academic/wiki/%E5%87%BD%E6%95%B0%E6%8F%92%E4%BB%B6%E6%8C%87%E5%8D%97).

----

-

-## Selected feature showcase

-

-1. Image display:

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-
